Research Data Management - An Online Introduction

Section outline

-

Publisher: HeFDI - Hessian Research Data Infrastructures

Authors (in alphabetical order): Arnela Balic (Frankfurt University of Applied Sciences), Muriel Imhof (Philipps-Universität Marburg), Sabrina Jordan (Universität Kassel), Esther Krähwinkel (Philipps-Universität Marburg), Patrick Langner (Hochschule Fulda), Andre Pietsch (Justus-Liebig-Universität Gießen), Robert Werth (Frankfurt University of Applied Sciences)

Acknowledgement: We would like to thank Stefanie Blum and Marion Elzner of Geisenheim University of Applied Sciences for their collaboration as well as the colleagues of the Thuringian Competence Network Research Data Management, the WG Prof. Goesmann "Bioinformatics and Systems Biology" (University of Giessen) and Dr. Reinhard Gerhold (University of Kassel) for their valuable feedback.

Last modified: 21.02.2025

Contact: forschungsdaten@fit.fra-uas.de

-

Requirements: No previous knowledge is required for this learning module. The chapters build on one another thematically, but can also be worked through individually. Where information from another chapter is required, that chapter is linked in place.

Target Audience: Students, doctoral candidates and researchers who are looking for a first introduction to research data management.

Learning Objective: After completing this learning module, you will understand what research data management involves and why it matters, and you will be able to put it into practice. The detailed learning objectives are listed at the beginning of each chapter.

Content: If you prefer reading, you can work through this learning unit using the text alone. The embedded videos offer an additional way into each topic, presenting the content differently for those who learn well from video explanations.

Average completion time (with videos): 3 hours 35 minutes

Average completion time (without videos): 2 hours 10 minutes

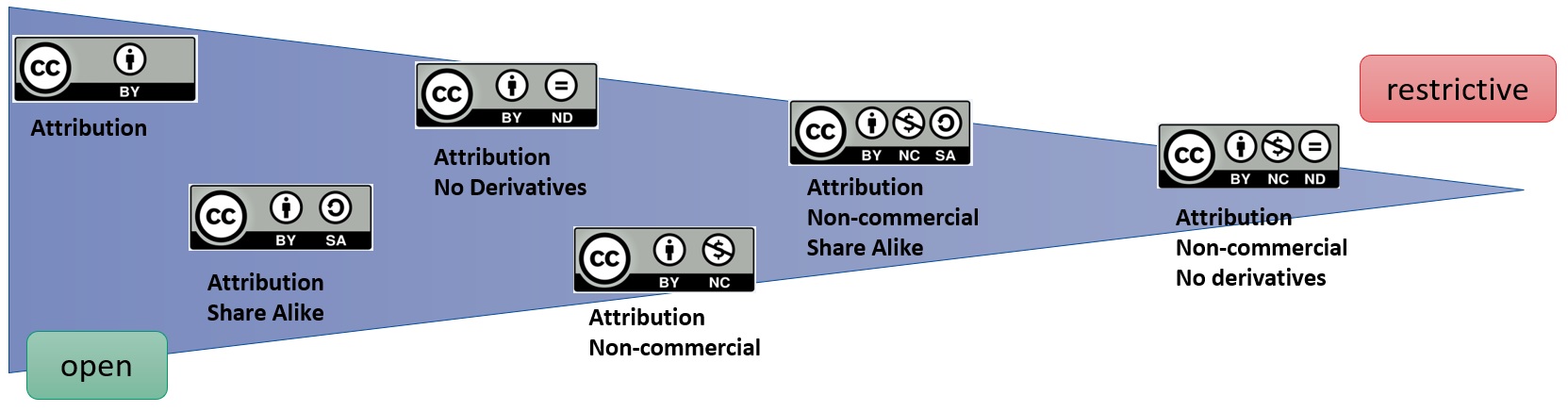

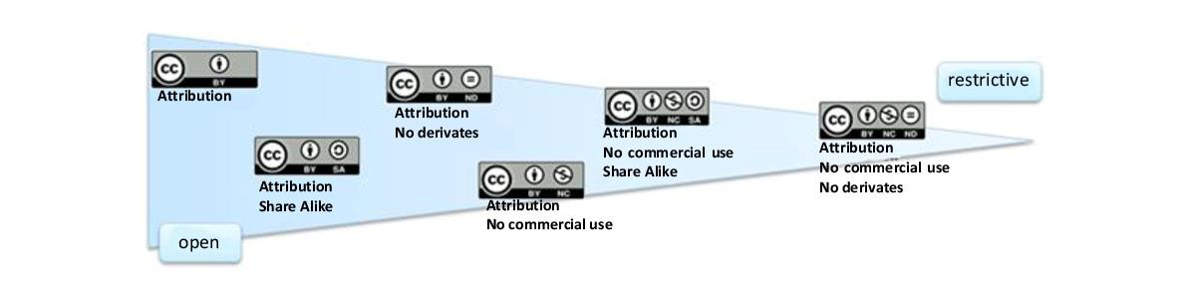

Licensing: This module is licensed under Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0). If you would like to repurpose the learning module, please feel free to write to hefdi@uni-marburg.de so that we can provide you with the latest version of the learning module.

Privacy (embedded videos): In this learning module, videos from YouTube are embedded on the following pages. When accessed, Google/YouTube uses cookies and other data, processes them and, if necessary, passes them on. Information about data protection and terms of use of the service can be found here. The use of the learning module requires appropriate consent.

-

Literature

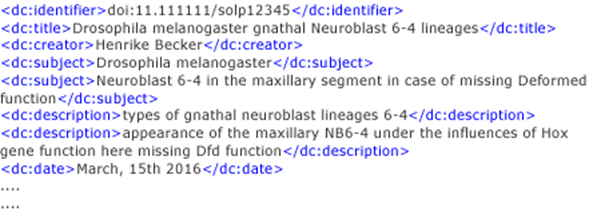

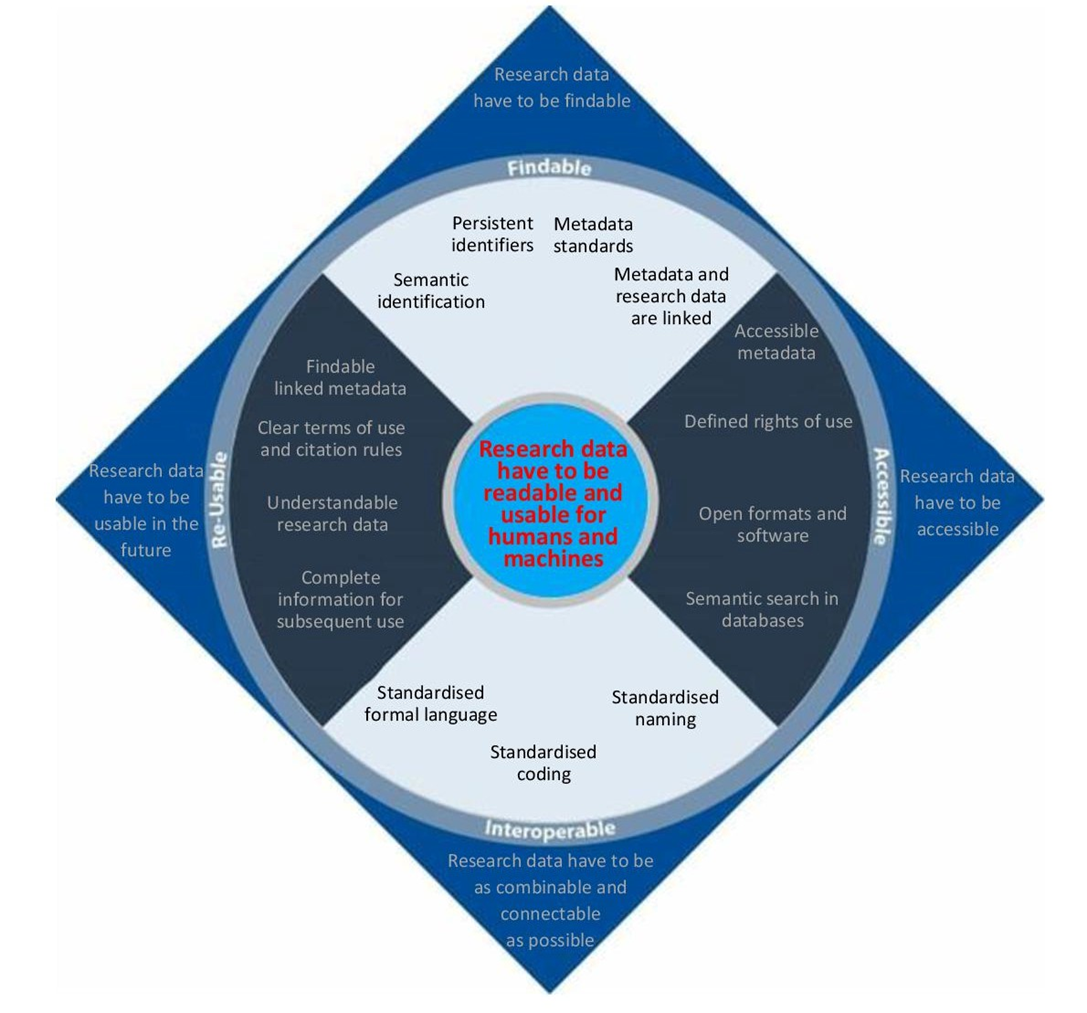

Wilkinson, M. D. et al. (2016): The FAIR Guiding Principles for Scientific Data Management and Stewardship. In: Scientific Data, 3, article 160018. https://doi.org/10.1038/sdata.2016.18

The original article on the FAIR principles, which have become probably the most important tool in research data management for assessing the quality of research data. They are part of the essential basic knowledge of the field. All major funders require projects to ensure that the data they generate comply with the FAIR principles. The FAIR principles are also an important part of the guidelines of the DFG code mentioned above. If there is no time to read the article, the FAIR principles can also be found on the pages of GO FAIR.

Open educational resources

Playlist of educational videos regarding research data management from RWTH Aachen

An introductory video series on research data management at RWTH Aachen University in German and English, based on a specific fictional research project.

Websites

Council for Information Infrastructures (RfII)

The Council for Information Infrastructures (Ger: Rat für Informationsinfrastrukturen; RfII) is a committee of experts appointed by the Joint Science Conference (Ger: Gemeinsame Wissenschaftskonferenz; GWK). It regularly publishes reports, recommendations and position papers and advises politicians and scientists on strategic issues relating to the future of digital science. For those who understand German, we particularly recommend the weekly mail service with up-to-date information on the topic of "research data management".

European Open Science Cloud (EOSC)

The goal of the European Open Science Cloud (EOSC) is to provide European researchers, innovators, businesses, and citizens with a federated and open multidisciplinary environment in which they can publish, find, and reuse data, tools, and services for research, innovation, and education purposes. The EOSC is recognized by the Council of the European Union as a pilot project to deepen the new European Research Area (ERA). It is also referred to as the Science, Research and Innovation Data Space, which will be fully linked to the other sectoral data spaces defined in the European Data Strategy. The website linked here is the metaportal of the European Union, which aims to bundle the European services for making research data available.

forschungsdaten.info

Information portal for research data management focussing on German particularities. For an international perspective, see e.g. the UK Data Service’s Learning Hub (https://ukdataservice.ac.uk/learning-hub/).

Hessian Research Data Infrastructures (HeFDI)

HeFDI is the Hessian state initiative for the development of research data infrastructures, in which all Hessian universities are involved. The state initiative intends to initiate and coordinate the necessary organisational and technological processes to anchor research data management at the participating universities. This includes not only a technical offering, e.g. in the form of a repository, but also counselling and other services such as regular training courses.

Local Research Data Management Service Provider at Frankfurt University of Applied Sciences

This is the website of the central service centre for research data management at Frankfurt University of Applied Sciences (Frankfurt UAS). Here you will find the contact information of the research data team as well as FAQs on research data management, which will hopefully answer some of your questions.

National Research Data Infrastructure Germany (NFDI)

The National Research Data Infrastructure (NFDI) is the largest German research data infrastructure project. Through the so-called NFDI consortia (associations of different institutions within a research field), it intends to promote the development of a data culture and infrastructure based on the FAIR principles. The aim is to systematically open up and network valuable data stocks from science and research so that the entire German science system can use them sustainably and to a high standard.

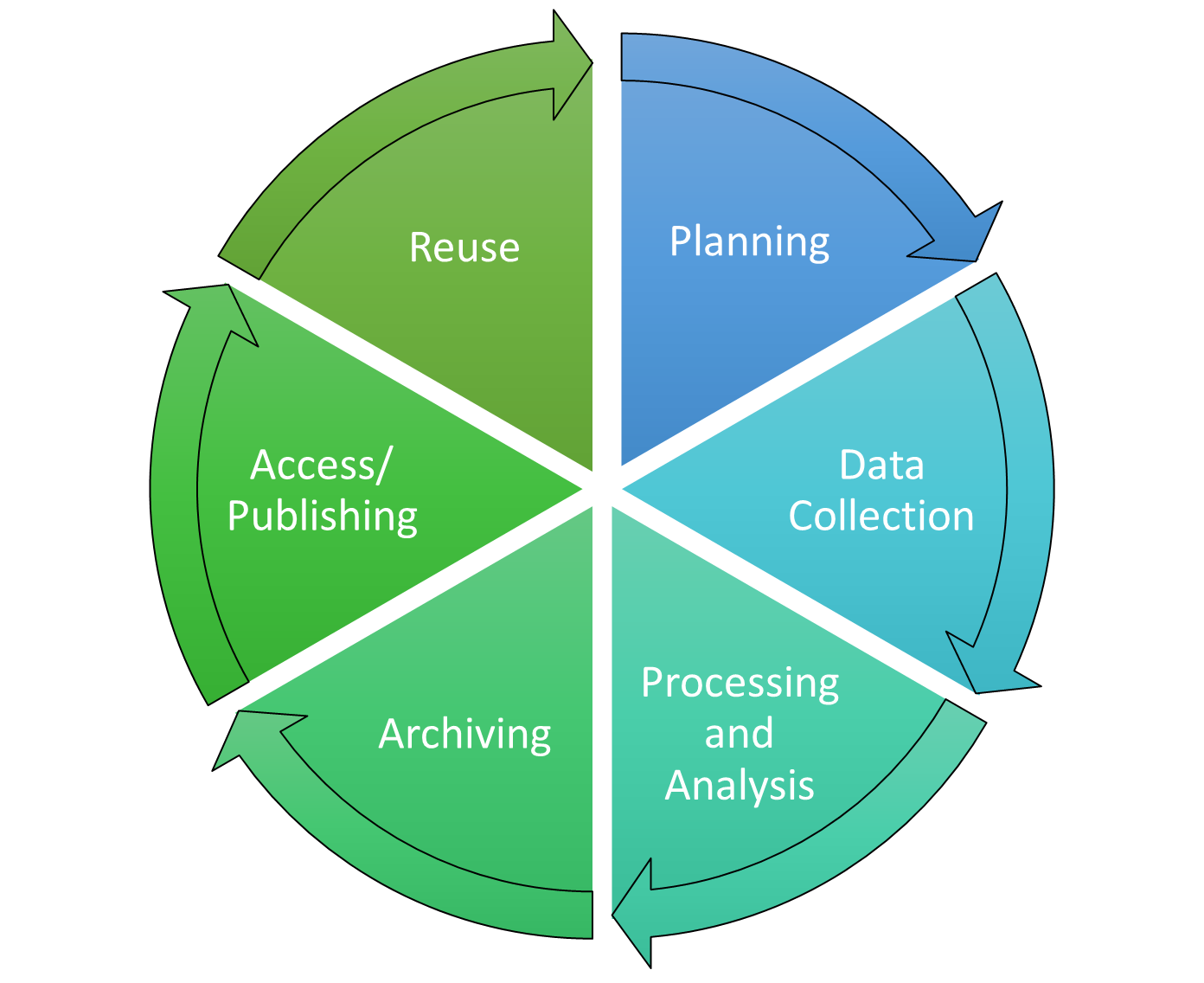

Research Data Alliance (RDA)

The Research Data Alliance (RDA) was launched in 2013 as a community-driven initiative by the European Commission, the U.S. National Science Foundation, the U.S. National Institute of Standards and Technology, and the Australian Government's Department of Innovation, with the goal of building a social and technical infrastructure that enables the open sharing and reuse of data. The RDA takes a grassroots, integrative approach that covers all phases of the data lifecycle, involves data producers, users, and managers, and addresses data sharing, processing, and storage. It has succeeded in creating a neutral social platform where international research data experts meet to exchange ideas and agree on topics such as social barriers to data sharing, education and training challenges, data management plans and certification of data repositories, disciplinary and interdisciplinary interoperability, and technological issues.

-

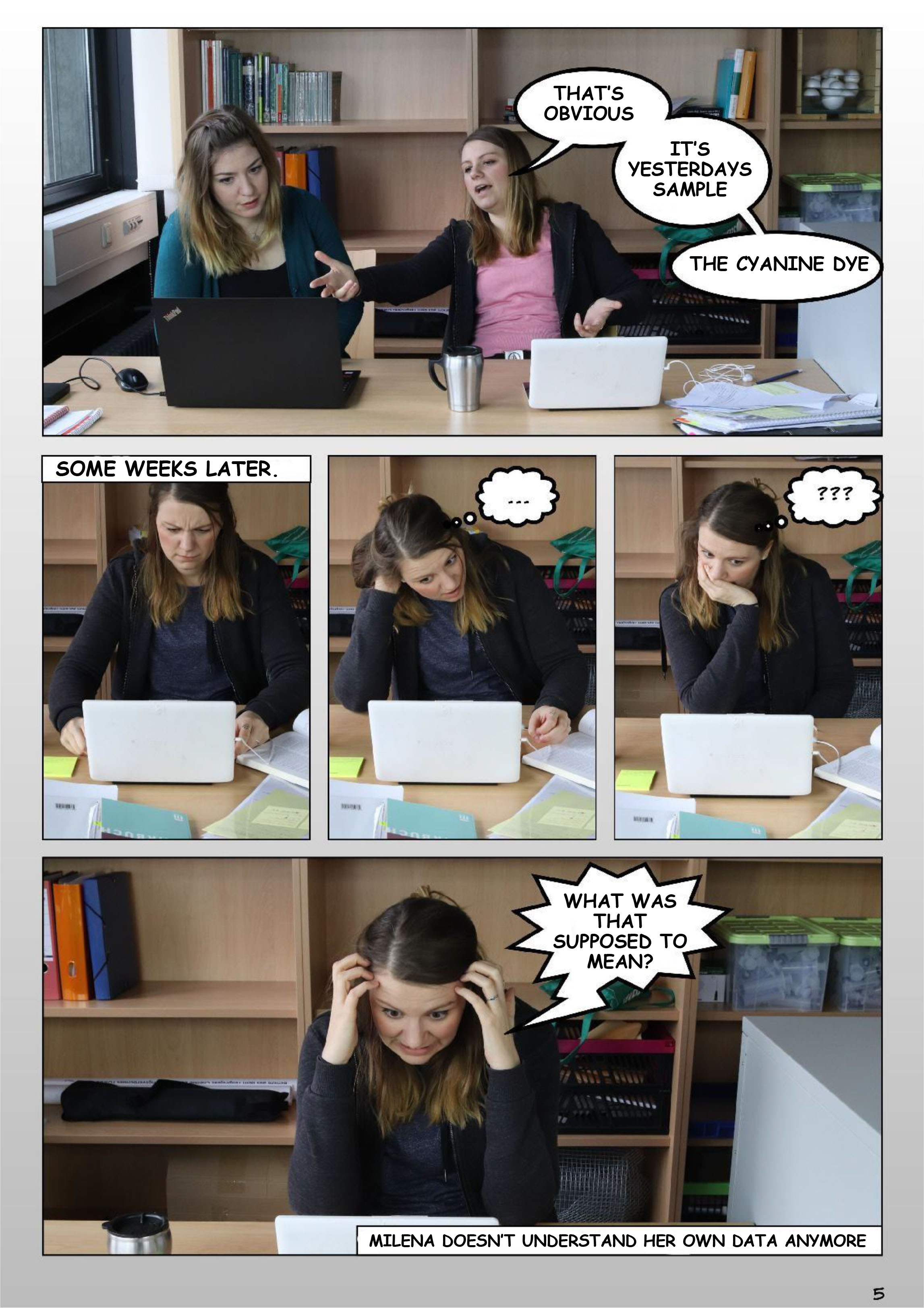

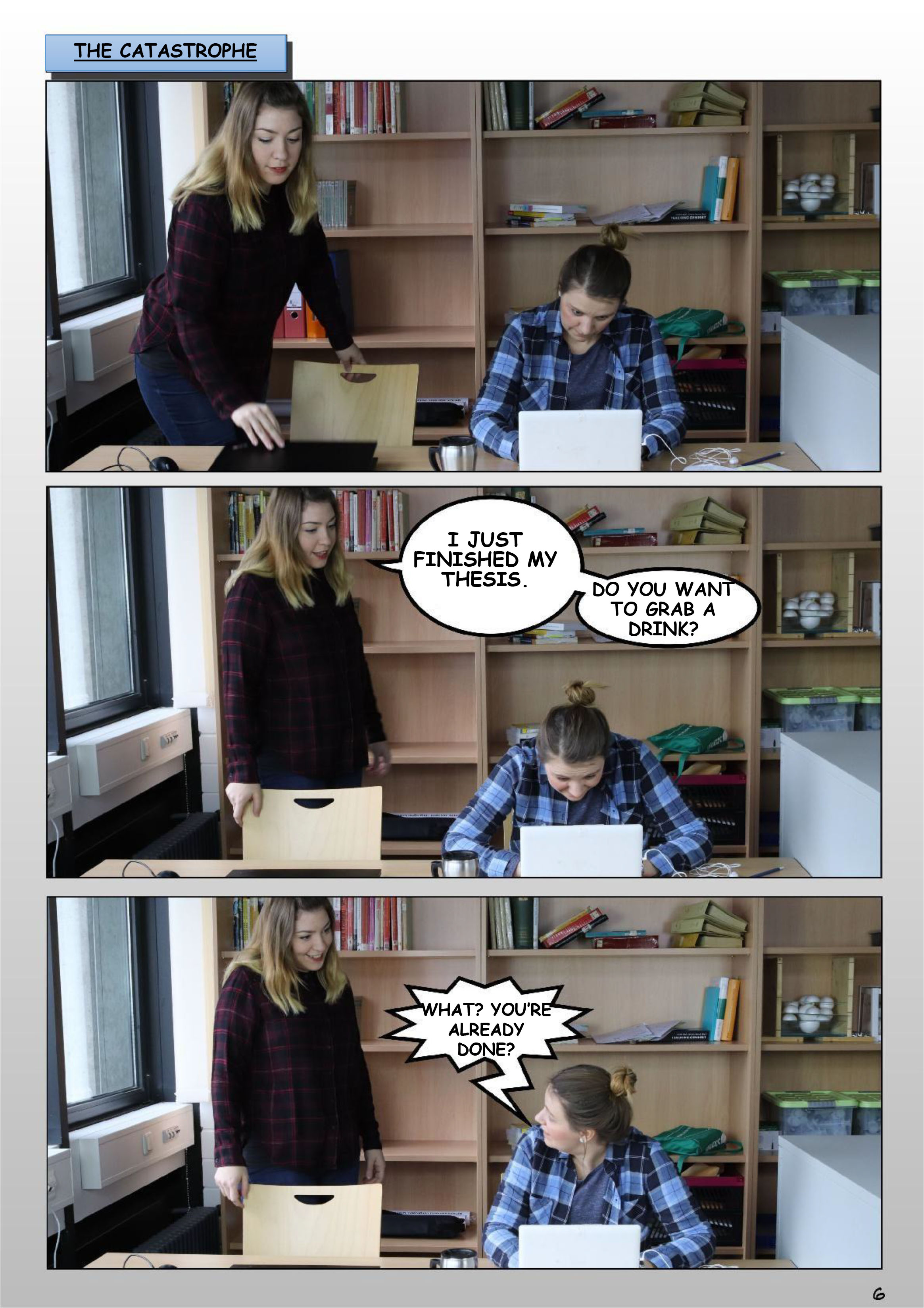

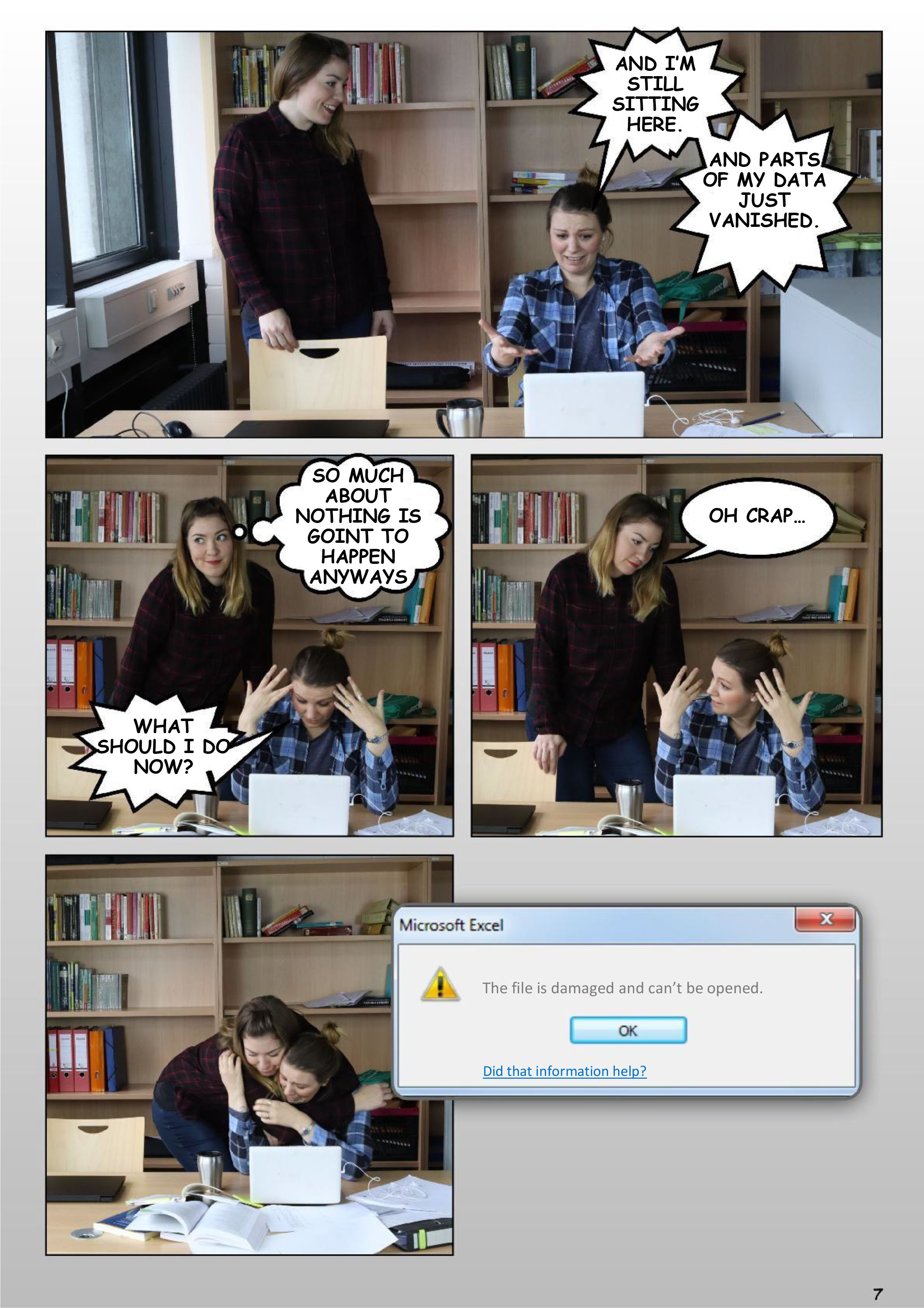

1.1: Stress, stress go away. Derivative version of: Stress lass nach – Eine Bildergeschichte zum Forschungsdatenmanagement. Created by Julia Werthmüller and Tatjana Jesserich, project FOKUS (Forschungsdatenkurse für Graduierte und Studierte), 2019. CC BY-SA 4.0. Funded by BMBF 2017-2019.

Source: Becker, Henrike, Einwächter, Sophie, Klein, Benedikt, Krähwinkel, Esther, Mehl, Sebastian, Müller, Janine, Werthmüller, Julia. (2019). Lernmodul Forschungsdatenmanagement auf einen Blick – eine Online-Einführung. Zenodo. https://doi.org/10.5281/zenodo.3381956

-

processing time: 15 minutes, 36 seconds

-

Test your knowledge about the content of the chapter

-

Here is a summary of the most important facts.

-

processing time: 9 minutes, 45 seconds

-

Test your knowledge about the content of the chapter!

-

Here is a summary of the most important facts.

-

processing time: 11 minutes, 12 seconds

processing time (without video): 5 minutes, 53 seconds

-

Test your knowledge about the content of this chapter!

-

A summary of the most important facts.

-

processing time: 24 minutes, 12 seconds

-

Test your knowledge about the content of the chapter!

-

Here is a summary of the most important facts

-

processing time: 14 minutes, 4 seconds

-

Test your knowledge about the content of the chapter!

-

Here is a summary of the most important facts

-

processing time: 13 minutes, 47 seconds

processing time (without video): 10 minutes, 35 seconds

-

Test your knowledge about the content of the chapter!

-

Here is a summary of the most important facts

-

processing time: 21 minutes, 46 seconds

processing time (without video): 11 minutes, 53 seconds

-

Test your knowledge about the content of the chapter!

-

Here is a summary of the most important facts

-

processing time: 18 minutes, 3 seconds

-

Test your knowledge about the content of the chapter!

-

Here is a summary of the most important facts

-

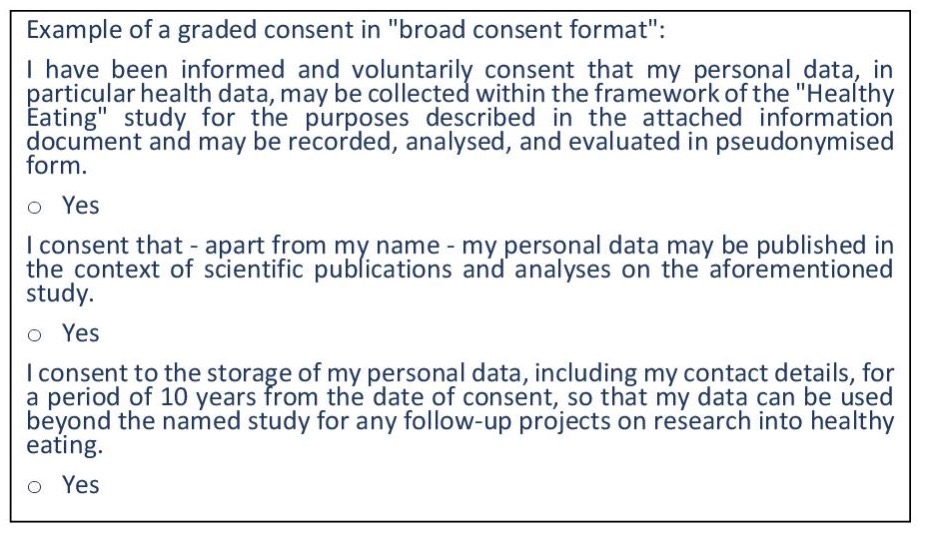

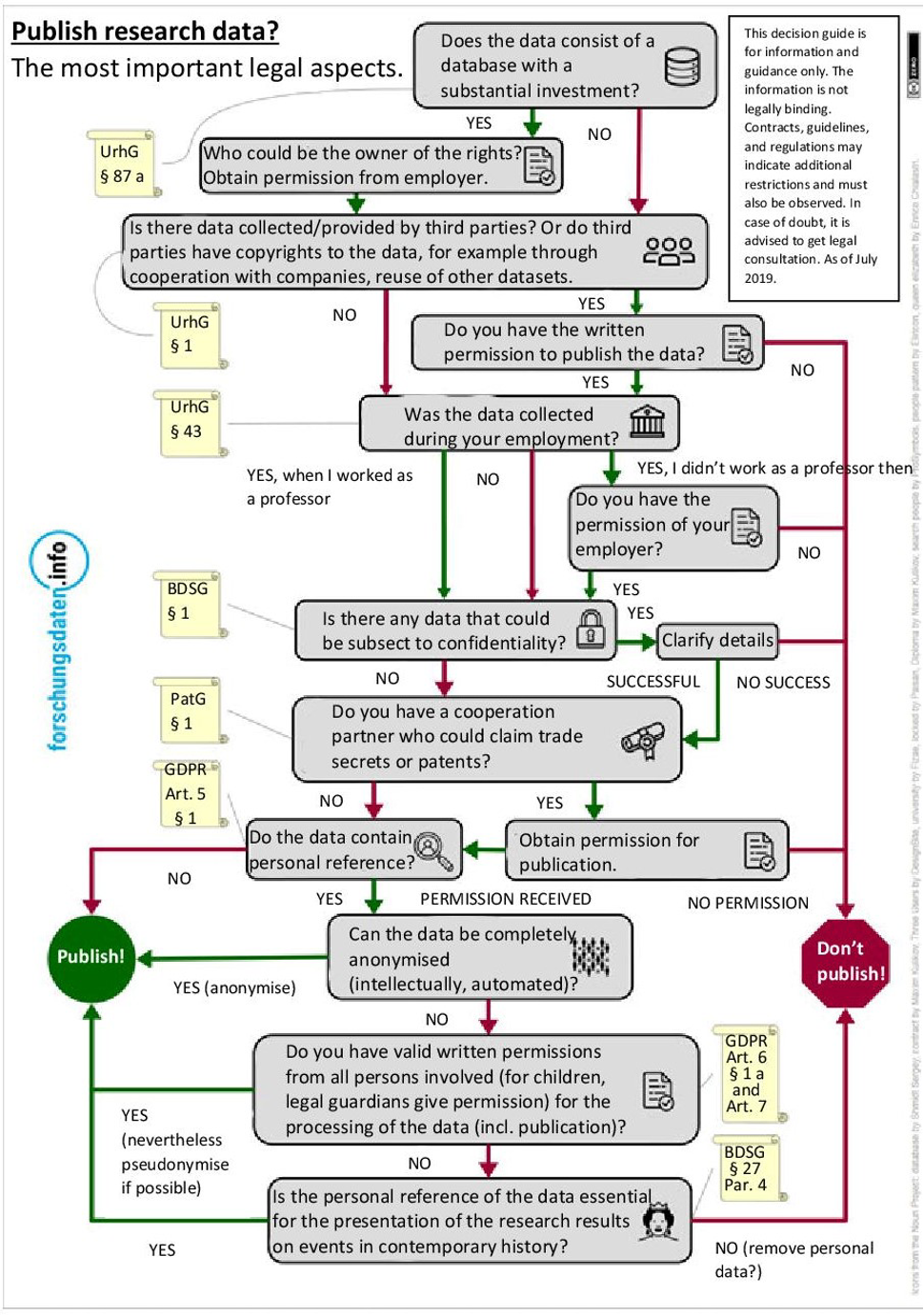

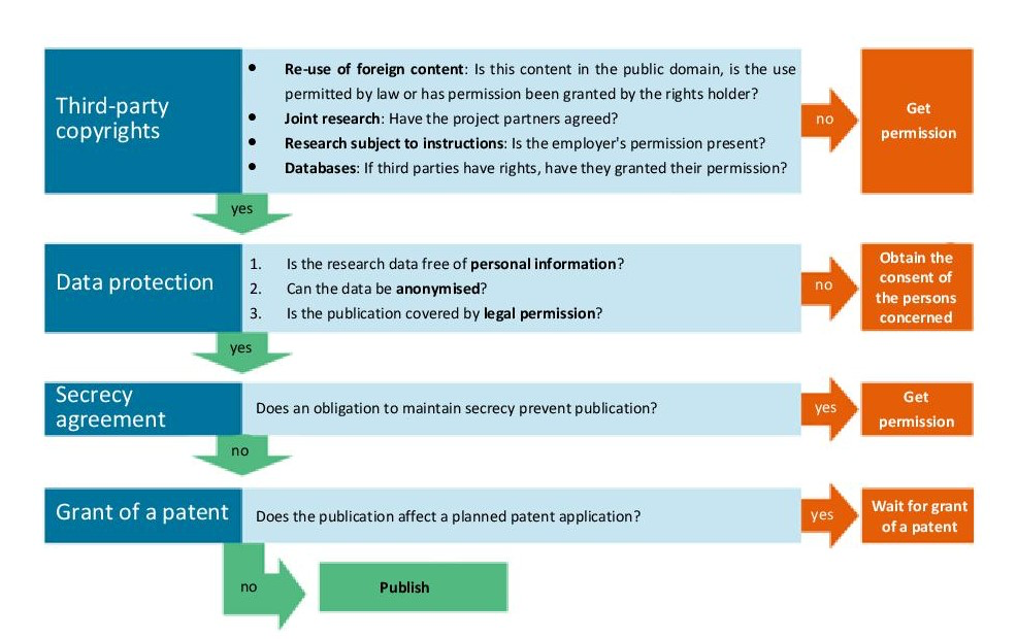

Disclaimer: The information provided here is not legally binding. For specific legal advice on your research, please contact the legal department or the data protection officer of the university (dsb@fra-uas.de).

processing time: 67 minutes, 10 seconds

processing time (without video): 20 minutes, 36 seconds

-

Test your knowledge about the content of this chapter!

-

Here is a summary of the most important facts