8.6 Data archiving

Besides data storage, data archiving is another necessary step in the research data life cycle. Data storage, as covered in the previous sections of this chapter, is primarily about keeping data during ongoing work within the project period. Data archiving, by contrast, is concerned with making the data available in as reusable a form as possible after the project has been completed. A distinction is often made between data storage in a repository and data archiving in the sense of long-term archiving (LTA for short). In many places, however, including the DFG's “Guidelines for Safeguarding Good Research Practice” from 2019 (“Guideline 17: Archiving”), the two terms are used interchangeably. In the following, when we speak of preservation or data retention, we mean the storage of data in a research data repository; when we speak of data archiving, we mean long-term archiving. The differences between the two are the subject of this section.

Storing data in a research data repository usually goes hand in hand with publishing the data produced. Access to such publications can be restricted and, in the case of sensitive data such as personal data, must be. In accordance with good scientific practice, repositories must ensure that published research data are stored and made available for at least ten years; after that, availability is no longer necessarily guaranteed, although it is usually continued. If the operator decides to remove data from the repository after this minimum retention period, the reference to the metadata must remain available. Repositories are usually divided into three types: institutional repositories, subject repositories, and interdisciplinary or generic repositories. So-called software repositories, in which software or pure software code can be published, form a fourth, more specific type. These are usually designed for a single programming language (e.g. PyPI for the programming language “Python”).

Institutional repositories include all repositories provided by institutions, most of them state-recognised. These may be universities, museums, research institutions or other institutions with an interest in making research results or other documents of scientific importance available to the public. The DFG's “Guidelines for Safeguarding Good Research Practice” (2019) formally require that, at a minimum, the research data underlying a scientific work be kept “at the institution where the data were produced or in cross-location repositories” (DFG 2019, p. 20). Before publishing your data, also check the requirements for long-term storage set out in your research institution's research data guideline or research data policy. Contact the research data officer at your university or research institution early on to discuss how and where you can publish the data in accordance with good scientific practice. Even if you have already published your data with a journal, it is often possible to publish them at your institution as well; ask the publisher or check your contract.

In addition to publishing in your institutional repository, you can also publish your data in a subject-specific repository. Publishing in a renowned subject-specific repository in particular can contribute considerably to your scientific reputation. To find out whether a suitable subject-specific repository exists for your research area, it is worth searching the repository registry re3data.

If there is no suitable subject repository, the remaining option is to publish in a large interdisciplinary repository. Free options include Zenodo, funded by the European Commission, and the international service figshare. If your university is a member of Dryad, you can also publish there free of charge. In Germany, RADAR offers a fee-based service for publishing data. The most frequently used option in Europe is probably Zenodo. When publishing on Zenodo, make sure that you also assign your research data to one or more communities that reflect your subject area within this generic service.

Regardless of where you end up publishing your data, always include a descriptive “metadata file” alongside the data that describes them and sets out the context in which they were collected (see Chapter 4). When choosing a repository, also check whether it is certified (e.g. with the CoreTrustSeal); re3data shows whether a repository holds such a certification.
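What such a metadata file contains depends on the repository and the discipline (see Chapter 4). As a minimal sketch, assuming a simple JSON file named `metadata.json` with hypothetical field names (not a schema prescribed by any particular repository), the file could be written next to the data like this:

```python
import json
import tempfile
from pathlib import Path


def write_metadata(data_dir, metadata, filename="metadata.json"):
    """Write a descriptive metadata file into the data directory.

    'metadata.json' and the field names below are hypothetical; use the
    metadata schema your chosen repository actually expects.
    """
    path = Path(data_dir) / filename
    text = json.dumps(metadata, indent=2, ensure_ascii=False)
    path.write_text(text, encoding="utf-8")
    return path


# Hypothetical example record; all values are placeholders.
example = {
    "title": "Example survey dataset",
    "creators": ["Doe, Jane"],
    "description": "Anonymised responses to an online questionnaire.",
    "collection_method": "Online questionnaire",
    "collection_period": "2023-03-01/2023-05-31",
    "files": ["responses.csv", "codebook.pdf"],
    "license": "CC-BY-4.0",
}

# Usage: write the record into a temporary directory and read it back.
with tempfile.TemporaryDirectory() as tmp:
    written = write_metadata(tmp, example)
    round_trip = json.loads(written.read_text(encoding="utf-8"))
```

A plain-text, openly documented format such as JSON keeps the metadata readable without special software.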

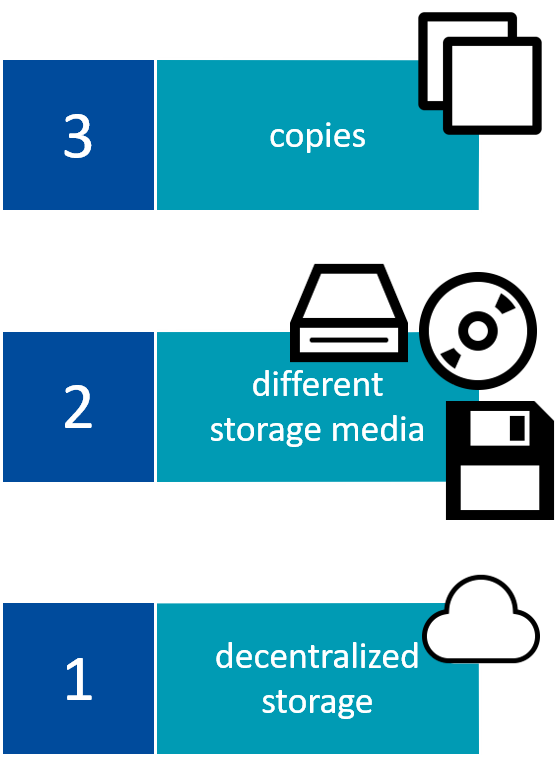

Because digital technology evolves so rapidly, the older data become, the more likely it is that they can no longer be opened, read or understood in the future. There are several reasons for this: the necessary hardware and/or software is no longer available, or scientific methods have changed so much that data are now collected in other ways and with other parameters. Modern computers and laptops, for example, now rarely have a CD or DVD drive, so these storage media can no longer be read on most machines. Long-term archiving therefore aims to keep data usable for an indefinite period, beyond the limits imposed by media wear and technical innovation. This requires both technical infrastructure and organisational measures. In doing so, LTA seeks to preserve the authenticity, integrity, accessibility and comprehensibility of the data.

To enable long-term archiving, it is important that the data are provided with meta-information relevant to LTA, such as the collection method, the hardware and software of the system used to collect the data, the coding, the metadata standards used (including their version) and, where applicable, a migration history (see Chapter 4). In addition, the datasets should comply with the FAIR principles as far as possible (see Chapter 5). This includes storing data in non-proprietary, openly documented formats wherever possible and avoiding proprietary ones. Open formats need to be migrated less often, have a longer lifespan and are more widely used. Also make sure that the files to be archived are unencrypted, patent-free and uncompressed.

In principle, files can be converted to another format losslessly, lossily or in a meaning-preserving way. Lossless conversion is usually preferable, as all information is retained. If smaller file sizes are the priority, however, some loss of information often has to be accepted. For example, converting WAV audio files to MP3 loses information through compression and lowers the sound quality, but produces a smaller file. The following table gives a first overview of which formats are suitable, and which are less suitable or unsuitable, for particular data types:

Tab. 8.3: Recommended and non-recommended data formats by file type

Listing a format in the column “less suitable or unsuitable formats” does not mean that you cannot use it at all if you want to store your data in the long term. The aim is rather to make you aware of questions of long-term availability from the start and to help you be clear about the advantages and disadvantages of each format. You can find an extended overview at forschungsdaten.info. If you want to delve deeper, the website of NESTOR – the German competence network for long-term archiving and long-term availability of digital resources – is a good place to look. Under NESTOR - Topic you will find current short articles from the field, e.g. on the TIFF or PDF formats. If you put these and other overviews side by side, you will notice that their recommendations on file formats differ, because experience in this field is still limited.

If you are unsure about formats, another good option is to ask a specialised data centre or a research data network, if one exists for your field. This is all the more advisable if you want to store your data there. You may find that your data will be accepted even if the chosen format is not the first choice from an LTA perspective. Operators of repositories and research data centres work closely with researchers and try to find ways of handling formats that are widely used in their fields, such as Excel files. For an example, see the guidelines of the Association for Research Data Education.

To decide for yourself which formats are suitable for your project, consider the following criteria when making your selection (according to Harvey/Weatherburn 2018: 131):

- Extent of dissemination of the data format

- Dependence on other technologies

- Public accessibility of the file format specifications

- Transparency of the file format

- Metadata support

- Reusability/Interoperability

- Robustness/complexity/cost-effectiveness

- Stability

- Rights that can complicate data storage

LTA currently uses two strategies for long-term data preservation: emulation and migration.

Emulation means reproducing a system, often an older one, on a current one so that the old system is imitated in as many respects as possible. Programmes that do this are called emulators. A prominent example is DOSBox, which emulates an old MS-DOS system with almost all its functions on current computers and thus makes it possible to use software written for that system, which would most likely no longer run on a current system.

Migration, or data migration, means transferring data to another system or another data carrier. In LTA, the aim is to ensure that the data can still be read and viewed on the target system. This requires that the data are not inseparably tied to the data carrier on which they were originally collected. Remember that the metadata must be migrated too!
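The carrier case of migration can be illustrated with a short Python sketch. It is a minimal illustration under stated assumptions, not a procedure used by any particular archive: a file is copied to a new location standing in for the new carrier, a SHA-256 checksum (an assumed choice; any documented checksum algorithm would serve) confirms that the copy is bit-identical, and an entry is appended to a hypothetical `migration_history` field in the metadata, which is then stored next to the migrated data. Format migration, i.e. converting files into another format, is not covered.

```python
import hashlib
import json
import shutil
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def migrate_file(src, target_dir, metadata):
    """Copy src into target_dir, verify the copy and log the migration.

    'migration_history' is a hypothetical metadata field name.
    """
    src = Path(src)
    dst = Path(target_dir) / src.name
    before = sha256_of(src)
    shutil.copy2(src, dst)  # copies content plus timestamps and permissions
    after = sha256_of(dst)
    if before != after:
        raise OSError(f"Checksum mismatch after migrating {src.name}")
    metadata.setdefault("migration_history", []).append({
        "file": src.name,
        "from": str(src.parent),
        "to": str(dst.parent),
        "sha256": after,
        "migrated_at": datetime.now(timezone.utc).isoformat(),
    })
    return dst


# Usage: two temporary directories stand in for the old and new carrier.
with tempfile.TemporaryDirectory() as old, tempfile.TemporaryDirectory() as new:
    data_file = Path(old) / "responses.csv"
    data_file.write_bytes(b"id,answer\n1,yes\n2,no\n")
    meta = {"title": "Example dataset"}
    copied = migrate_file(data_file, new, meta)
    # Migrate the metadata too: store the updated record next to the data.
    meta_text = json.dumps(meta, indent=2)
    (Path(new) / "metadata.json").write_text(meta_text, encoding="utf-8")
    copy_identical = copied.read_bytes() == data_file.read_bytes()
    files_in_new = sorted(p.name for p in Path(new).iterdir())
```

Recording the checksum in the migration history lets a later reader confirm that the data were not altered along the way.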

When choosing a suitable storage location for long-term archiving, you should consider the following points:

- Technical requirements – The service provider should have a data conversion, migration and/or emulation strategy. In addition, the files should be checked for readability and viruses at regular intervals. All steps should be documented.

- Seals for trustworthy long-term archives – Various seals have been developed to assess whether a long-term archive is trustworthy, e.g. the nestor seal (developed on the basis of DIN 31644 “Criteria for trustworthy digital long-term archives”), ISO 16363 or the CoreTrustSeal.

- Costs – Operating servers and implementing technical standards incur costs, which is why some service providers charge for their services. The price depends above all on the volume of data.

- Making the data accessible – Before choosing the storage location, decide whether the data should be made accessible or only stored.

- Service provider longevity – Economic and political factors influence how long a service provider will continue to exist.
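The regular checks named under “Technical requirements” are carried out by the service provider, but the idea behind a documented integrity check can be sketched briefly. Assuming that checksums are recorded in a manifest when the data are ingested (a common practice, but an assumption here), a later check recomputes them and logs missing or altered files. The function names are hypothetical, and the sketch covers integrity only; it does not replace format validation or a virus scan.

```python
import hashlib
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(archive_dir):
    """Record a checksum for every file (hypothetically run once at ingest)."""
    root = Path(archive_dir)
    return {str(p.relative_to(root)): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def fixity_check(archive_dir, manifest):
    """Compare current checksums with the manifest and return a log entry."""
    root = Path(archive_dir)
    missing, changed = [], []
    for rel_path, expected in manifest.items():
        path = root / rel_path
        if not path.is_file():
            missing.append(rel_path)
        elif sha256_of(path) != expected:
            changed.append(rel_path)
    return {
        "checked_at": datetime.now(timezone.utc).isoformat(),
        "files_checked": len(manifest),
        "missing": missing,
        "changed": changed,
        "ok": not missing and not changed,
    }


# Usage: build a manifest, simulate damage and keep a log of both checks.
check_log = []
with tempfile.TemporaryDirectory() as archive:
    (Path(archive) / "a.csv").write_bytes(b"x,y\n1,2\n")
    (Path(archive) / "b.txt").write_bytes(b"codebook\n")
    manifest = build_manifest(archive)
    check_log.append(fixity_check(archive, manifest))    # all files intact
    (Path(archive) / "a.csv").write_bytes(b"x,y\n1,3\n")  # simulate corruption
    (Path(archive) / "b.txt").unlink()                   # simulate loss
    check_log.append(fixity_check(archive, manifest))
```

Keeping every log entry, not just the failures, is one way of meeting the requirement that all steps be documented.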

In summary, the information on LTA given here is mainly of theoretical value to you and of only limited practical relevance. Publishing in a certified repository is a sound choice. Above all, make sure that you publish at a trustworthy institution and ask that institution in advance about its options for, or plans regarding, LTA. You can use the aspects of good LTA listed here to formulate questions for the institution. This should provide adequate conditions for long-term archiving.